Experts believe that certain industries are future-proof.

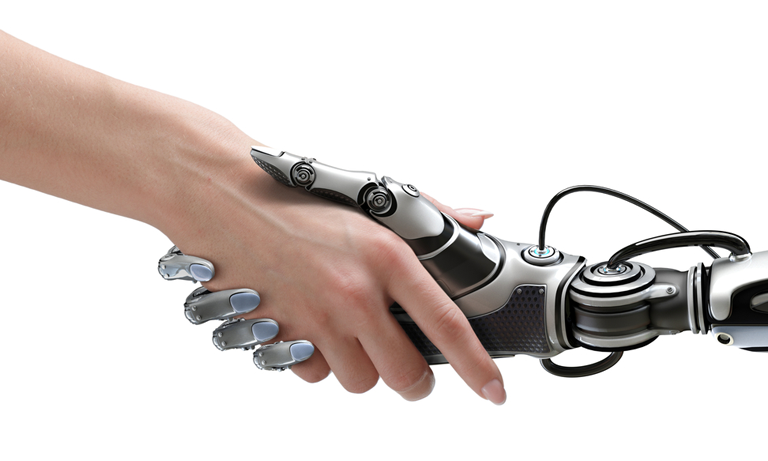

Like it or not, robots are here to stay, and every day we seem to hear about another job that our artificially intelligent friends can do better or more efficiently than we can. Whilst there are clear benefits to using robots or artificial intelligence (AI) for certain jobs, is it always right to assume they are the best choice?

One example is any situation in which vast amounts of data need to be taken in and ‘crunched’ to extrapolate results. Another is where there is a moral requirement to remove bias or emotion from the equation: here, using machines rather than humans has been considered a good way of achieving a fairer and more balanced outcome.

By leveraging the potential of machine learning, recruitment companies can ensure that every candidate for an open position receives fair consideration. This is clearly the way forward for fairer and more transparent recruitment practices. Or is it?

A report featured in the Financial Times warns that it may be dangerous to rely on machines to be ‘fair’ in the recruitment process. It is true that computers and robots cannot act on conscious or unconscious bias of their own, and that they have the processing power to analyse the information available about each applicant more quickly than any human recruitment consultant. Even so, robots still have to rely on data and parameters set by a human. The decisions a robot makes therefore depend entirely on the data it has learnt from, and at some point that data has to be fed to it by a mere mortal.

In effect, robots learn from the patterns that already exist. One recent study of the use of robots in the selection process demonstrated this: the biggest indicator of whether a job seeker would be called for an interview was age. It turned out that the robots were ‘discriminating’ against the youngest and oldest applicants, dismissing them in favour of an ‘ideal’ age range. More worryingly, gender and ethnicity could also end up determining whether a job seeker’s application succeeds.

Can we really rely on machines to end bias in the recruitment industry? A robot built, in the words of Jon Bischke, CEO of Entelo, to automate the “20 to 50 things the best recruiters do consciously or unconsciously” will, it seems, do exactly that. Put another way, why create a robot in the image of a recruiter, even the very best of recruiters, and expect it to behave any differently from the recruiter on whom it was based?

If a machine learns from a human, it will act like a human – and therein lies the problem.
